Devblog #1: Live Stats for Livestream (Redis caching, Twitch Helix, Websockets)

Deep dive into the tech behind Live Stats for Livestream

2024-01-01 19:30 +0000 [Modified : 2024-01-01 19:35 +0000]

League of Legends does something I’ve always loved for esports: showing real-time stats of the game being played below the stream on their lolesports platform. This feature is something I’ve been wanting to replicate for Apex Legends for a long time, and I finally had the opportunity to implement it.

What is the goal of this system?

The primary aim is to provide Twitch viewers with real-time stats during a tournament, ensuring the data is concise enough not to overwhelm viewers yet informative enough to enhance their viewing experience. This includes displaying a live scoreboard, minimap, and player stats/inventory without distracting from the main stream.

This has huge value for viewers who want to engage with the stream: they can easily check the players' healing items, weapons, or stats, all of which are important factors in a competitive Battle Royale game.

The tech behind Live Stats for Livestream ("Shaw")

Quick overview

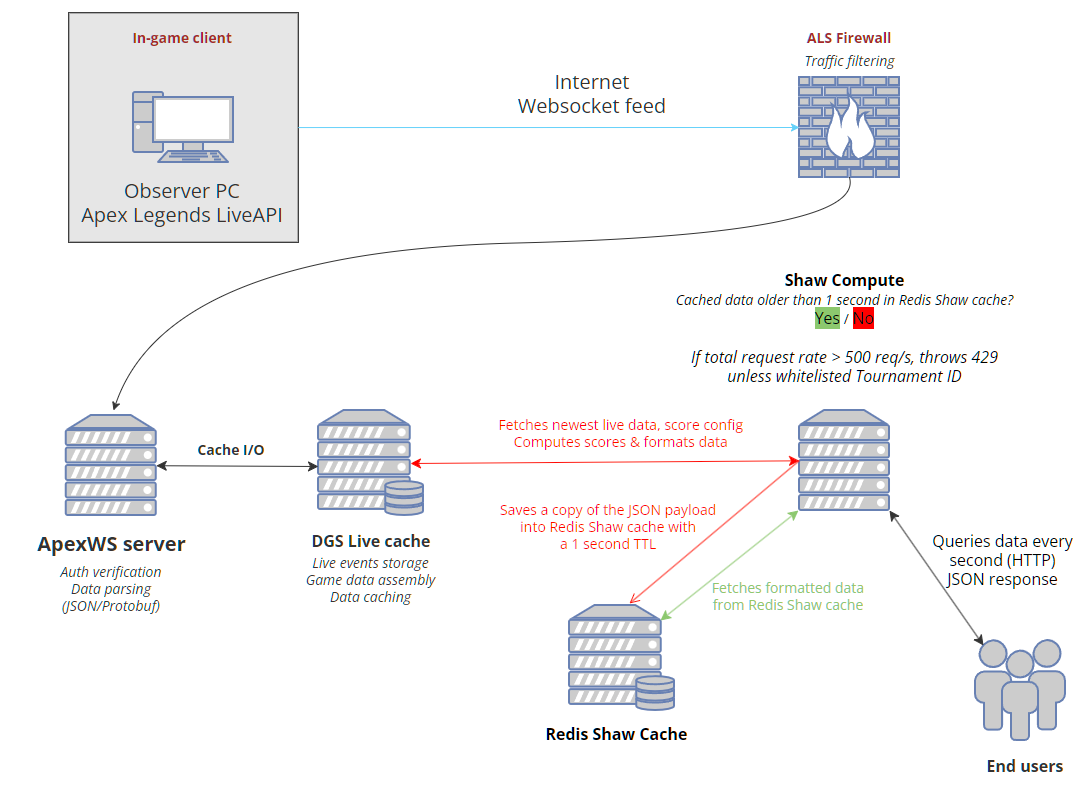

Live Stats for Livestream (code name Shaw, the project's internal name and a Person of Interest reference) is a PHP backend that hooks into the existing Redis live game data cache of the ApexWS server and exposes endpoints that return formatted data to end users.

DGS Uplink

The core of the system remains ApexWS, the homemade WebSocket server that powers the existing DGS (“Detailed Game Stats”) feature on the Apex Legends Status website. As a quick reminder, this is a PHP WebSocket server that talks to the game admin's game client, which sends live events to the server (player damage, item pickups, kills, etc.) in either JSON or Protobuf format. In this setup, DGS (more precisely, the ApexWS server) does not handle client queries directly. Separating the critical components protects the stability of the tournament ecosystem: ApexWS is critical, and any crash could result in the loss of ongoing tournament data. Shaw is less critical; if it crashes, it can quietly restart without significantly impacting the tournament or the end users.

End user <-> Shaw Compute

First, the end user makes a request every second to the dgs-shaw-lb.apexlegendsstatus.com endpoint. The request goes through Cloudflare first, which handles the Web Application Firewall, before being proxied to the Shaw Compute server. Shaw Compute then queries the DGS MySQL database to check whether a game is in progress for the provided TournamentID and whether Live Stats is allowed for this tournament. If not, we return a waiting message to the user with a matching description.
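
The gating step described above could look roughly like the sketch below. The function name, the array keys (`live_stats_enabled`, `game_in_progress`) and the response shape are all assumptions made for illustration; the real DGS schema and messages are not shown in this post, and the MySQL lookup is replaced by a plain array so the snippet runs on its own.

```php
<?php
// Hypothetical gate: decide whether to serve live stats or a waiting message.
// $tournament stands in for the row fetched from the DGS MySQL database
// (null when the TournamentID is unknown). All keys are illustrative.
function shawGate(?array $tournament): array
{
    if ($tournament === null) {
        return ['status' => 'waiting', 'message' => 'Unknown tournament.'];
    }
    if (empty($tournament['live_stats_enabled'])) {
        return ['status' => 'waiting', 'message' => 'Live Stats is not enabled for this tournament.'];
    }
    if (empty($tournament['game_in_progress'])) {
        return ['status' => 'waiting', 'message' => 'Waiting for the next game to start.'];
    }
    // Only here does the request continue to the cache lookup.
    return ['status' => 'live', 'message' => ''];
}

// Example: Live Stats is enabled, but no game is running yet.
echo json_encode(shawGate(['live_stats_enabled' => true, 'game_in_progress' => false])), PHP_EOL;
```

Running the cheap checks first means a request for an idle tournament never reaches the cache layer.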

Redis Shaw Cache

If a game is currently running, Shaw Compute queries the Redis Shaw Cache (which isn’t the same cache as the one used by ApexWS) and checks the latest update timestamp. If the latest data is less than 1 second old, we simply return the saved JSON payload to the user, saving precious computing resources and processing time. The overall HTTP request takes about 35ms in this case (client <-> server).

ApexWS query

If the data is older than 1 second, Shaw Compute queries the Redis DGS Live cache for the corresponding internal ID. This avoids connecting to ApexWS over WebSocket, which would take much longer (because of the WebSocket protocol and authentication on ApexWS). Here, we simply hit our Redis cache in read-only mode. The data is then parsed by Shaw Compute, and the resulting payload is saved into the Redis Shaw cache with a TTL of 1 second before being sent to the end user. In this case, the overall processing time is about 140ms.

At the end of the process, the JSON payload is parsed by the user's browser (JavaScript), and the DOM is updated accordingly.
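
The two server-side paths described above (serve the cached payload if it is fresh, otherwise rebuild and store it) can be sketched as follows. This is a minimal illustration, not Shaw's actual code: an in-memory array stands in for the Redis Shaw cache so the snippet runs without a Redis server, and the class name, the `$rebuild` callback and the payload fields are all hypothetical.

```php
<?php
// Sketch of the "serve from Shaw cache if fresh, else rebuild" flow.
// An in-memory array replaces Redis here; names are illustrative only.
final class ShawCacheSketch
{
    private array $entries = [];

    public function __construct(private float $maxAgeSeconds = 1.0) {}

    // $rebuild is called only when no fresh payload exists. In Shaw it would
    // read the Redis DGS Live cache and format the data for viewers.
    public function get(string $gameId, callable $rebuild, ?float $now = null): string
    {
        $now ??= microtime(true);
        $entry = $this->entries[$gameId] ?? null;

        if ($entry !== null && ($now - $entry['updatedAt']) < $this->maxAgeSeconds) {
            return $entry['payload']; // fast path: cached JSON is fresh enough
        }

        $payload = json_encode($rebuild($gameId)); // slow path: recompute
        $this->entries[$gameId] = ['payload' => $payload, 'updatedAt' => $now];
        return $payload;
    }
}

$calls = 0;
$rebuild = function (string $gameId) use (&$calls): array {
    $calls++;
    return ['game' => $gameId, 'build' => $calls];
};

$cache = new ShawCacheSketch();
$cache->get('game-1', $rebuild, 100.0); // miss: rebuilds
$cache->get('game-1', $rebuild, 100.5); // hit: 0.5 s old, served from cache
$cache->get('game-1', $rebuild, 101.2); // stale: 1.2 s old, rebuilds again
echo "rebuilds: $calls", PHP_EOL; // rebuilds: 2
```

With a real Redis backend, the expiry would more likely be delegated to Redis itself (for example `SET key value EX 1`), so a stale payload simply disappears instead of being compared against a timestamp. Either way, the expensive rebuild runs at most about once per second per game, no matter how many viewers are polling.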

Twitch channel status

To check the status of a Twitch channel, a service simply calls the Twitch Helix API every minute and updates the DGS MySQL database. If the channel is online, we also save the viewer count, which is displayed on the DGS user interface. A quick PHP mockup could look as follows:

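    // Assumes $channelToCheck holds the channel login name, and that
    // $twitch_client_id / $twitch_access_token hold valid app credentials.
    $curl = curl_init();

    curl_setopt_array($curl, array(
        // Get Streams filters by channel through the user_login parameter.
        CURLOPT_URL => 'https://api.twitch.tv/helix/streams?' . http_build_query(array('user_login' => $channelToCheck)),
        CURLOPT_RETURNTRANSFER => true,
        CURLOPT_TIMEOUT => 10, // seconds; 0 would wait indefinitely
        CURLOPT_FOLLOWLOCATION => true,
        CURLOPT_CUSTOMREQUEST => 'GET',
        CURLOPT_HTTPHEADER => array(
            'Client-Id: ' . $twitch_client_id,
            'Authorization: Bearer ' . $twitch_access_token,
        ),
    ));

    $body = curl_exec($curl);
    curl_close($curl);

    // curl_exec() returns false on failure; only decode a real response.
    $response = ($body === false) ? null : json_decode($body, true);
    var_dump($response);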
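
As a follow-up sketch, the decoded response can be reduced to the two values stored in the database. The Helix Get Streams endpoint returns an empty `data` array when the channel is offline, and a stream object with `type` and `viewer_count` fields when it is live. The function name and the returned array shape below are assumptions for illustration.

```php
<?php
// Hypothetical helper: reduce a decoded Get Streams response to the
// online flag and viewer count. Accepts null so a failed request
// is simply treated as "offline".
function parseStreamStatus(?array $response): array
{
    $stream = $response['data'][0] ?? null;
    if ($stream === null || ($stream['type'] ?? '') !== 'live') {
        return ['online' => false, 'viewers' => 0];
    }
    return ['online' => true, 'viewers' => (int) ($stream['viewer_count'] ?? 0)];
}

// Example with hand-written sample data (not a real API response).
$sample = ['data' => [['user_login' => 'example_channel', 'type' => 'live', 'viewer_count' => 1234]]];
var_dump(parseStreamStatus($sample));
var_dump(parseStreamStatus(['data' => []]));
```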

Scaling & current limitations

In its current state, Shaw can handle about 2000 concurrent requests (at a 1-second polling interval) on a single server. The main bottleneck isn’t computing power, as the caching does a pretty good job there, but bandwidth. Bandwidth is expensive.

It would, however, not be hard to scale the current system, as it was built with this in mind. Depending on the success of the feature, more processing power could be added, but that raises the question of funding: DGS is entirely free to use in its current state, and if this feature were to be used for a tournament the size of ALGS, for example, it wouldn’t be possible without external funding.

There are also some easy workarounds to mitigate this to some extent: reducing the payload size by optimizing it, or simply increasing the delay between HTTP calls to Shaw Compute.
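
To get a feel for how these two levers affect bandwidth, here is a back-of-the-envelope estimate. The 20 000-byte payload is a made-up figure used only for illustration, not a measured Shaw value, and the calculation ignores HTTP/TLS overhead and compression.

```php
<?php
// Rough outbound bandwidth estimate for polling clients, in Mbit/s.
// Ignores HTTP/TLS overhead and compression.
function estimateMbps(int $clients, int $payloadBytes, float $intervalSeconds): float
{
    return ($clients * $payloadBytes * 8) / $intervalSeconds / 1_000_000;
}

// 20 000 bytes is a hypothetical payload size, not a measured Shaw figure.
printf("%.0f Mbit/s\n", estimateMbps(2000, 20000, 1.0)); // 320 Mbit/s
printf("%.0f Mbit/s\n", estimateMbps(2000, 20000, 2.0)); // 160 Mbit/s (polling every 2 s)
printf("%.0f Mbit/s\n", estimateMbps(2000, 10000, 1.0)); // 160 Mbit/s (payload halved)
```

Under these assumptions, halving the payload and doubling the polling interval save the same amount of bandwidth, but only the former keeps the stats as fresh for viewers.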

In the end, I believe this is a good proof of concept to show what could be made to enhance the Apex Legends viewing experience on the esports side of things, and I really hope we’ll see this kind of feature for ALGS at some point.