Devblog #2: Homelab review (ISP router replacement, and cool tech)

A review of the homelab, ranging from ISP router replacement to hardware review

1500 Words

2024-03-05 21:00 +0000 [Modified : 2024-03-05 22:00 +0000]

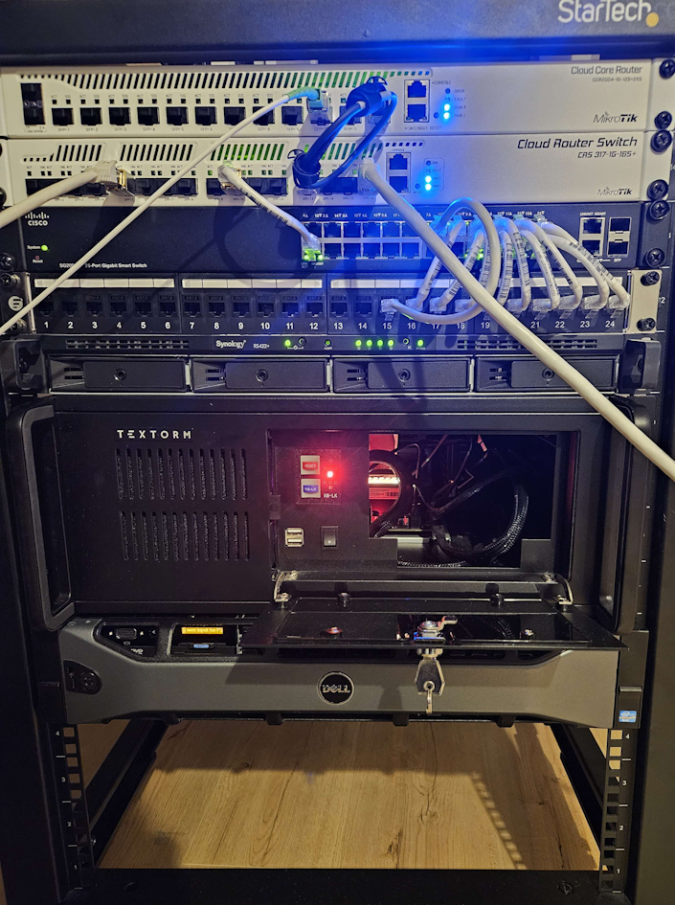

This is where Apex Legends Status started: inside a homelab. It started as a single Raspberry Pi, and is now a server rack with a few appliances. There is a lot of cool tech behind this never-ending project :) The most important requirement was for it to be quiet, as it stands in my living room, while also being powerful enough to run everything I need with enough headroom for the future. It’s not always easy to manage, as things go wrong at random intervals, and you’re the only one dealing with hardware/software issues. Yet, this is in my opinion the best way of learning a lot of things in a wide range of domains: networking, sysadmin, … Here is a quick overview from top to bottom!

Routing: Mikrotik CCR2004-1G-12S+2XS

Overview

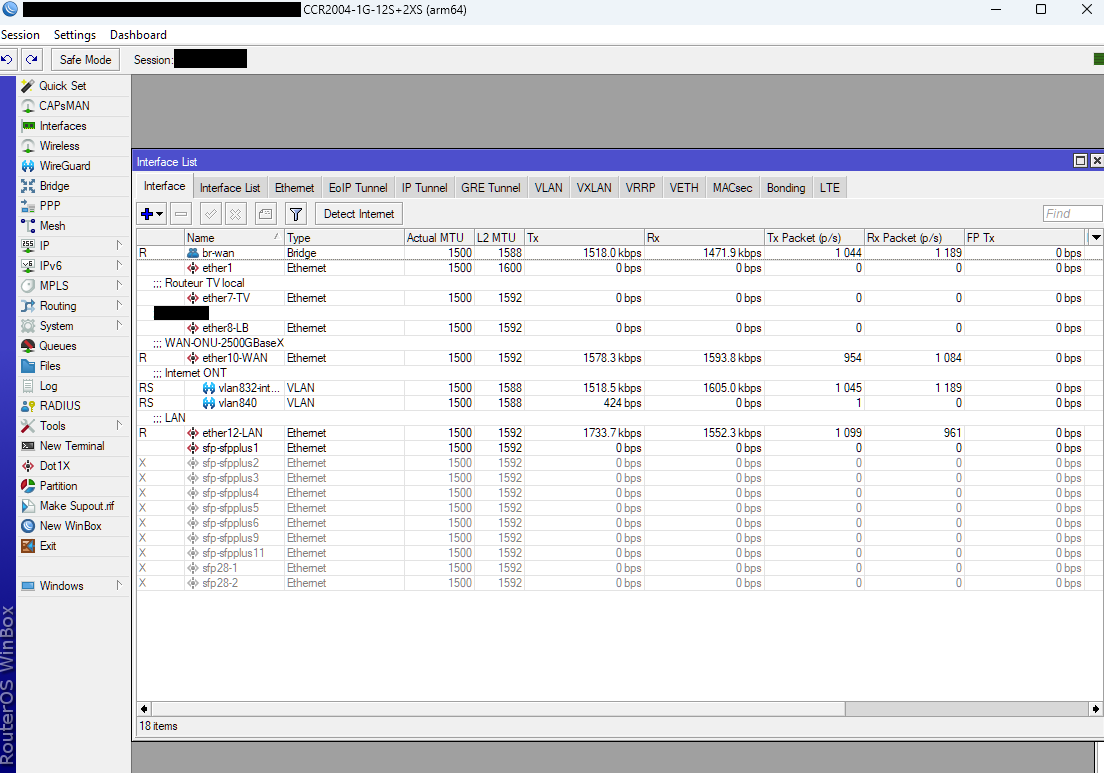

The most important piece of hardware: the router! This replaces the good old ISP router (which was not powerful enough… it was unfortunately not able to handle a large number of concurrent packets - and why would you make something easy if you can make it harder? :)). In the past, this was an EdgeRouter 4, which I replaced after deciding to go with a multi-gigabit ISP connection. The Mikrotik CCR2004 is a perfect router for this, with 12 SFP+ (10Gb/s) ports and 2 SFP28 ports (25Gb/s), leaving plenty of headroom for future upgrades, while also being cheaper than most high-end brands. It was a bit noisy at first, so a fan replacement was needed. Fortunately, it’s really easy to open and swap the stock fans with a pair of Noctuas, making it dead silent. A thermal repaste was also necessary to cool this beast down, and it gave a 20°C drop - really worth it and easy to do. The router also acts as the firewall to/from the outside world, keeping ports open only for the services that need them. The great thing about it: it barely goes above 10% CPU usage under high traffic!

ISP Router replacement - the “fun” part

My main requirement when choosing this router was for it to handle 10Gb/s, but also to let me put my ISP router back in its cardboard box. French ISPs have a long history of using non-standard protocols to authenticate their clients, so a fully customizable router was necessary for this. We’re lucky to have a few people who worked on reverse-engineering the auth flow, allowing people to tinker around and avoid using their ISP router by setting the correct DHCP client parameters (basically, making your ISP believe it is their router trying to authenticate with the network), and some specific VLANs. The base configuration is quite straightforward, but it gets really hard when you need additional services, such as TV. If you want to dive into this, lafibre.info is a great resource to get started. It gets even funnier (not really) when you have to replace the ONT: it involves reprogramming the optic to replace the serial number and its vendor ID, which can be done by using some FS.com optics. Doing all this gives way more freedom, at the cost of being dependent on any ISP network changes (auth changes, etc.). It’s really beneficial in my case as I have a 2Gb/s plan with my ISP, which their provided router would cap at 1Gb/s per port.

Configuration can be done through the RouterOS CLI, or by using the old RouterOS GUI (WinBox). This is one of the main downsides of Mikrotik products: the software feels really old.

Core Switch: CRS317-1G-16S+

Another great piece of Mikrotik hardware, with 16 SFP+ ports (10Gb/s). It handles all 1Gb/s+ switching needs, with a 10Gb/s DAC uplink to the router. After swapping fans and thermal paste for the same reasons as on the router, it also runs dead quiet. Unfortunately, SFP+ RJ45 adapters had to be used due to constraints on the connected devices (lack of compatible SFP ports, mostly). It’s really important when using such adapters to space them evenly, as they get REALLY hot when running. This can cause early failure, and most importantly make the fans ramp up to maximum under heavy traffic! It’s not used to its full potential at all for now, as no other 10Gb/s devices are linked to it at this time. It does however still allow me to use as much of my bandwidth as my workstation can take, since it only supports up to 2.5Gb/s - some improvement to be made there in the future, maybe? :-)

1Gb Switch: Cisco SG200

This is a really old piece of Cisco hardware with 24 gigabit ports - and it was also the first switch in my homelab, a few years ago! It still works wonderfully, and its main benefit is being passively cooled. It’s connected to the core switch, and distributes network connectivity to all gigabit devices behind it, through a patch panel right below it. Short patch cables keep it tidy, which is very different from the back of the rack!

NAS: Synology RS422+

Until I started putting all my stuff in a rack, I was running a desktop-format NAS (DS418+). It was great, and I really enjoyed the DSM OS. I only use it for storage (no Docker containers or anything running on it), so I upgraded to a simple RS422+ after moving to a rack. It holds 48TB of raw storage capacity, spread across 2 nodes in RAID 1 each. I hate deleting stuff, and tend to always keep storing more and more - this gives some headroom, but I’m not sure it will last long!
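As a rough sanity check on what that raw figure means in practice, here is a minimal Python sketch of the usable capacity. It assumes both RAID 1 groups are plain two-way mirrors of matched drives, and it ignores filesystem overhead and the TB/TiB difference; the function name is made up for illustration.

```python
def usable_capacity_tb(raw_tb: float, copies: int = 2) -> float:
    """Usable space when every block is stored `copies` times (RAID 1 = 2)."""
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return raw_tb / copies


if __name__ == "__main__":
    raw = 48  # TB of raw storage, as quoted in the post
    print(f"{usable_capacity_tb(raw):.0f} TB usable out of {raw} TB raw")
```

In other words, under those assumptions roughly half of the raw capacity is actually available for data.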

A first “production” server, Reese

Named after a Person of Interest character, this is my main homelab server where I throw most of my workload. It is housed in a 4U Textorm case (I absolutely do not recommend it, its rails are a pain to work with), using desktop-grade hardware. This is still plenty of power to run things, with the benefit of low noise levels thanks to Noctua fans. Its current config is a Ryzen 9 5950X CPU (16 cores/32 threads, more than enough), 128GB of DDR4 RAM, a GT 710 GPU for video output and a random set of SSDs/NVMe drives for storage. The most important parts of the storage are an Intel datacenter-grade SATA SSD for boot/heavy write data, and a pair of Samsung NVMe SSDs in RAID 1 for main storage. My main issue when using SSDs is their write lifespan, as I’ve already destroyed a few SSDs that way. The Intel one is specifically made for this kind of usage, with a high TBW. A typical consumer-grade SSD from this period would have a 400TB write lifespan, while the Intel one is at 1.2PB - on top of all the other data integrity features found on this kind of datacenter-grade SSD.

One of the main downsides of using such hardware is not having any IPMI control (= controlling your server when it is offline, allowing you to boot it, stop it, connect virtual media to it, etc.). The GPU output is connected to a PiKVM box that does all this. It gives me a KVM for this server, and also controls its ATX I/O, which makes it perfect for remote management if necessary.

This server handles a few of my side projects, and still one non-critical ALS component: a Redis database used for leaderboard generation. Redis barely uses any CPU resources for this usage, but needs large amounts of RAM (~80GB as of now); this would be really expensive if hosted through a dedicated server provider or in the cloud. It also handles some other components, such as OpenSearch/Logstash used for log ingestion and management (which will be the next devblog subject!).
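To put the two TBW figures quoted for this server's SSDs in perspective, here is a small Python sketch that converts an endurance rating into an expected lifetime. The 0.5TB/day write rate is purely an illustrative assumption (the real write volume of this server is not something I have measured here), and the function name is hypothetical.

```python
def years_until_worn_out(tbw_rating_tb: float, writes_tb_per_day: float) -> float:
    """Years of writes a drive can absorb before reaching its rated TBW."""
    if writes_tb_per_day <= 0:
        raise ValueError("writes_tb_per_day must be positive")
    return tbw_rating_tb / writes_tb_per_day / 365


if __name__ == "__main__":
    assumed_writes = 0.5  # TB written per day: an assumption, not a measurement
    drives = {
        "consumer SSD (400TB TBW)": 400,
        "Intel datacenter SSD (1.2PB TBW)": 1200,
    }
    for name, tbw in drives.items():
        years = years_until_worn_out(tbw, assumed_writes)
        print(f"{name}: about {years:.1f} years at {assumed_writes} TB/day")
```

Whatever the real write rate is, the ratio stays the same: the datacenter drive is rated for three times as many writes as the consumer one.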

The lab server, Finch

Named after yet another Person of Interest character, this is a Dell PowerEdge R720 server with 2 Xeon CPUs (6 cores/12 threads total), 128GB of RAM, and a few SAS drives running a Proxmox install. It’s a really power-hungry server that I only use when playing around and learning new tech. It’s also quite noisy, but this old version of iDRAC allows you to control the fan speed manually through ipmitool, which makes it barely audible when running while still having decent temps.
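For the curious, manual fan control on this server generation could look something like the Python sketch below, which only builds and prints the ipmitool commands instead of running them. The raw byte sequences are the ones widely shared by the homelab community for this generation of iDRAC; they do not come from official Dell documentation, so treat them as an assumption and double-check them against your own hardware. The host, user, and function names are placeholders.

```python
import shlex

# Raw bytes commonly reported for 12th-gen Dell iDRAC: an assumption,
# not something confirmed by official Dell documentation.
MANUAL_MODE = ["raw", "0x30", "0x30", "0x01", "0x00"]  # disable automatic fan control
AUTO_MODE = ["raw", "0x30", "0x30", "0x01", "0x01"]    # hand control back to iDRAC


def base_command(host: str, user: str) -> list:
    # -E makes ipmitool read the password from the IPMI_PASSWORD environment variable
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E"]


def fan_speed_commands(percent: int, host: str = "idrac.example.lan", user: str = "root") -> list:
    """Return the two commands that switch to manual mode and set all fans to `percent`."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    base = base_command(host, user)
    set_speed = ["raw", "0x30", "0x30", "0x02", "0xff", f"0x{percent:02x}"]
    return [base + MANUAL_MODE, base + set_speed]


if __name__ == "__main__":
    # Print the commands rather than executing them, so nothing changes by accident
    for cmd in fan_speed_commands(20):
        print(shlex.join(cmd))
```

With manual control the firmware no longer reacts to load on its own, so it is worth keeping an eye on temps and sending `AUTO_MODE` again if they climb.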

… the nasty part: UPS, PIs, and random stuff

Cable management is OK in the front of the rack, but it’s a whole other story when looking at its back. This is where all the random Pis and other home automation things are stored (temp sensors, light controls, some other secret stuff…) along with the wifi AP. All the critical devices are plugged into an APC UPS that gives around 20 minutes of running time in case of a power outage, which has always been more than enough so far. When its battery runs low, it sends a signal to all running servers to make them shut down gracefully, ensuring data integrity - and all servers automatically restart when the power comes back.
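The low-battery logic can be sketched in a few lines of Python. This is only one possible way to do it: it assumes Network UPS Tools (NUT), whose `upsc` client prints `name: value` lines with status flags such as `OL` (on line), `OB` (on battery) and `LB` (low battery). The sample readings, the 300-second threshold, and the function names are illustrative and do not describe the actual setup in this rack.

```python
def parse_upsc(text: str) -> dict:
    """Turn `name: value` lines (the format printed by NUT's upsc) into a dict."""
    values = {}
    for line in text.splitlines():
        name, sep, value = line.partition(":")
        if sep:
            values[name.strip()] = value.strip()
    return values


def should_shut_down(values: dict, min_runtime_s: float = 300) -> bool:
    """Shut down only when on battery AND the battery is low or nearly empty."""
    flags = values.get("ups.status", "").split()
    if "OB" not in flags:
        return False  # mains power is present, nothing to do
    if "LB" in flags:
        return True   # the UPS itself reports a low battery
    runtime = values.get("battery.runtime")
    return runtime is not None and float(runtime) < min_runtime_s


# Made-up readings for illustration
SAMPLE = """battery.charge: 18
battery.runtime: 240
ups.status: OB LB
"""

if __name__ == "__main__":
    print("shut down now:", should_shut_down(parse_upsc(SAMPLE)))
```

Checking the runtime estimate in addition to the `LB` flag leaves a bit of margin for servers that take a while to stop cleanly.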

The best thing about this rack is that it still has 4U of empty space - what’s next? :-)